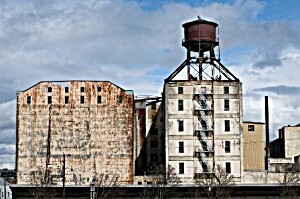

As a big fan of Mikado biscuits (one of the key brands of Jacob’s Biscuits), I have been watching with interest to see what would become of the former Jacob’s Biscuits factory outside Dublin, Ireland, where the biscuits were produced for years before the factory closed its doors in 2008. It was no surprise to me when Amazon bought the property and announced plans to build a data center there. With adequate power, good accessibility and a location just 20 km from other major data centers west of Dublin, it seems a logical site for Amazon's next data center in Ireland, and very much in line with the trend of repurposing existing sites as data centers.

This trend has been going on for years and has included projects as disparate as the MareNostrum supercomputer, housed in a former chapel in Barcelona, and an i/o co-location data center that is, ironically, located inside a former New York Times printing site in New Jersey.

Some operators are reusing the existing superstructure and power infrastructure, which were not originally built with data centers in mind, and installing purpose-built, modular data centers inside. This allows them to add capacity in step with demand.

The CSC Data Center in Finland is a prime example. Built inside a former paper mill in Kajaani, the CSC data center makes use of CommScope’s Data Center on Demand (DCoD) to meet its needs on a modular, pay-as-you-grow basis.

Not only did CSC make use of the capacious power infrastructure built up to support the original paper mill, it also leveraged the crisp, year-round weather prevalent throughout most of Europe, which allows direct free air cooling. Together, these enable a rapid and cost-effective data center roll-out, repurposing our industrial heritage for the 21st century.

How has direct free air cooling come to be so accessible, and why hasn’t it been used more widely in the past?

Cooling is the second-largest energy expenditure in a data center (after powering the IT equipment), so it is closely scrutinized for any potential savings.

In the last decade, both the IT equipment and HVAC/Building Services vendors have risen to the challenge, building upon work by organizations such as ASHRAE’s Technical Committee 9.9, which defined several different permissible temperature and humidity ranges for the data center cold aisle.

In recent years, each ASHRAE-defined range, or operational envelope, has been larger than the previous one, resulting in lower cooling costs. Both the IT equipment OEMs and the HVAC industry build their equipment with these ASHRAE envelopes in mind, with recently designed IT equipment rated for the less onerous ASHRAE classes A2 or A3. An example of this is the Dell Fresh Air Server.

Similarly, the newer data center HVAC systems coming on stream capitalize on these envelopes with cold aisle/hot aisle containment, direct free air cooling, and adiabatic cooling, as offered in CommScope’s DCoD with SmartAir. The convergence of these two technical trends, which enables the use of wider temperature and humidity ranges, provides tremendous savings on the cost of conditioning the cold aisle air and, as we have seen with the CSC data center, eliminates the need to install a chiller.
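
To get a rough feel for why a wider envelope matters, here is a minimal sketch that estimates what share of the year outside air is cool enough to be used directly. It is an illustration only, not a sizing tool: the function name `free_cooling_fraction`, the sample readings, and the upper dry-bulb limits per class are assumptions on my part (the limits should be checked against the current ASHRAE thermal guidelines), and the sketch ignores humidity, air quality, and heat picked up from fans. It also assumes that outside air colder than the envelope can be tempered by mixing in warm return air, so only the upper limit is tested.

```python
# Assumed upper allowable dry-bulb inlet temperatures in degrees C per ASHRAE
# class. These values are illustrative; verify them against the current
# ASHRAE thermal guidelines before relying on them.
ALLOWABLE_MAX_DRY_BULB_C = {"A1": 32.0, "A2": 35.0, "A3": 40.0}


def free_cooling_fraction(outdoor_temps_c, ashrae_class):
    """Return the fraction of readings at or below the class's upper limit.

    Hypothetical helper: humidity is ignored, and air colder than the
    envelope is assumed to be usable after mixing with warm return air.
    """
    if not outdoor_temps_c:
        raise ValueError("need at least one temperature reading")
    limit = ALLOWABLE_MAX_DRY_BULB_C[ashrae_class]
    usable = sum(1 for t in outdoor_temps_c if t <= limit)
    return usable / len(outdoor_temps_c)


if __name__ == "__main__":
    # Made-up outdoor readings in degrees C, not measurements from any site.
    sample = [-5.0, 12.0, 20.0, 33.0, 36.0, 41.0]
    for ashrae_class in ("A1", "A2", "A3"):
        share = free_cooling_fraction(sample, ashrae_class)
        print(ashrae_class, round(share, 2))
```

In practice you would feed this a full year of hourly readings for the site in question. The point of the sketch is simply that each step up in class raises the limit, so more hours qualify for direct free air cooling.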

Of course, all of this could change if Microsoft makes good on its plan to build data centers under the sea. That seems a ways off; until then, look for the trend of repurposing existing sites and adopting direct free air cooling to continue.

Have you looked into direct free air cooling for your data center?