Data centers are becoming more efficient. Companies are utilizing hardware, virtualization and cloud services to increase their data storage capacity while reducing their data centers’ footprints. Using these modern methods and storage architectures should mitigate the risk of downtime, yet a recent study by the Uptime Institute shows that 31 percent of IT management, critical facilities management and senior executives surveyed experienced “an IT downtime incident or severe service degradation in the past year.”

Can you prevent downtime?

Sometimes incidents are not preventable, no matter how hard you try. What's interesting, however, is that of those surveyed who reported failures, 80 percent stated that their most recent failure could have been prevented. In this study, “onsite power failure” was the leading cause of downtime at 33 percent, followed closely by “network failure” at 30 percent.

I am surprised that onsite power failures are still one of the leading causes of IT downtime. Redundant power supplies and backup generators are easy to deploy to avoid this pitfall. At the very least, this equipment can delay the loss of power until systems can be properly shut down and data can be diverted to a backup method (hopefully, backup measures for data storage are already in place).

Network failure is an entirely different conversation. When IT managers look at a data center, their main concern is whether the storage equipment is functioning properly. But what about the network? Operators should be able to connect everything and go, right? Wrong. What happens when they need to add or remove a server and they unplug the wrong port? What happens when an unscheduled change takes down half the network?

Preventing a network failure

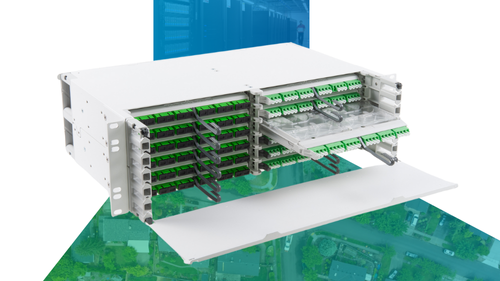

Even though accidents can happen, most network failures are preventable. The first step is setting up a network structure that meets your needs today and leaves room for future expansion. As companies or clients grow, that planning can save operators a lot of headaches and reduce the risk of an error leading to downtime.

Once a network is planned, operators can select reliable components and cabling whose end-to-end performance has been verified. Even if operators expect to replace the cabling in two years, a network outage caused by a faulty cable is an avoidable headache.

In a perfect world, once the network is in place and operational, operators could walk away without any issues. Unfortunately, it is not a perfect world. Changes will need to be made: servers added, servers removed, and so on. When ports need to be added or removed, finding the right port is not easy and can be time-consuming, and the process leaves a lot of room for human error that leads to a network outage.
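To illustrate why an up-to-date record of connections reduces the chance of unplugging the wrong port, here is a minimal Python sketch. It assumes a hypothetical inventory that maps each server to the rack, patch panel and port it uses, and checks the port a technician is about to unplug against that record. The names `PortLocation`, `find_port` and `confirm_unplug`, and the rack, panel and server IDs, are illustrative only and do not come from any AIM product.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class PortLocation:
    """Physical location of a port (hypothetical schema)."""
    rack: str
    panel: str
    port: int


# Hypothetical inventory: which server is patched into which port.
INVENTORY: Dict[str, PortLocation] = {
    "web-01": PortLocation(rack="R12", panel="PP-A", port=7),
    "db-02": PortLocation(rack="R12", panel="PP-A", port=8),
}


def find_port(server: str) -> PortLocation:
    """Return the recorded port for a server, or raise if none is recorded."""
    try:
        return INVENTORY[server]
    except KeyError:
        raise LookupError(f"no recorded connection for {server!r}") from None


def confirm_unplug(server: str, rack: str, panel: str, port: int) -> bool:
    """True only if the port about to be unplugged is the one on record."""
    return find_port(server) == PortLocation(rack, panel, port)


if __name__ == "__main__":
    print(find_port("web-01"))
    # Adjacent port 8 belongs to db-02, so this check fails.
    print(confirm_unplug("web-01", "R12", "PP-A", 8))
```

The check is only as good as the inventory: if the record is maintained by hand, it can drift from what is actually plugged in, which is the gap automated monitoring aims to close.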

Wouldn’t it be valuable to know in real time:

- What is connected to the network?

- How is it connected?

- Where are connected devices located?

- When are changes made?

This kind of real-time visibility is achievable with a reliable automated infrastructure management (AIM) system. An AIM system not only monitors which ports are live and which are not; it can also locate ports that need attention during an emergency, create automated work orders, and track when changes are made.
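As a rough sketch of the change-tracking idea, assume the system can take periodic snapshots of which device is connected to each port. Comparing two snapshots yields the list of changes, and any change on a port that no open work order covers can be flagged as unscheduled. The snapshot format, the `Change` and `WorkOrder` types, and the function names below are hypothetical; how a real AIM system collects this data is outside the scope of this sketch.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, List, Optional

# Hypothetical snapshot format: port ID -> connected device (None if empty).
Snapshot = Dict[str, Optional[str]]


@dataclass(frozen=True)
class Change:
    port: str
    before: Optional[str]
    after: Optional[str]
    seen_at: datetime


@dataclass(frozen=True)
class WorkOrder:
    """A scheduled change: the ports a technician is authorized to touch."""
    order_id: str
    ports: frozenset


def diff_snapshots(old: Snapshot, new: Snapshot, seen_at: datetime) -> List[Change]:
    """List every port whose connected device differs between two snapshots.

    A port missing from one snapshot is treated the same as an empty port.
    """
    changes = []
    for port in sorted(old.keys() | new.keys()):
        before, after = old.get(port), new.get(port)
        if before != after:
            changes.append(Change(port, before, after, seen_at))
    return changes


def unscheduled(changes: List[Change], orders: List[WorkOrder]) -> List[Change]:
    """Keep only changes on ports that no open work order covers."""
    authorized = set()
    for order in orders:
        authorized |= order.ports
    return [c for c in changes if c.port not in authorized]


if __name__ == "__main__":
    t = datetime(2024, 1, 1, 9, 0)
    before = {"PP-A/7": "web-01", "PP-A/8": "db-02", "PP-A/9": None}
    after = {"PP-A/7": None, "PP-A/8": None, "PP-A/9": "web-03"}
    orders = [WorkOrder("WO-101", frozenset({"PP-A/7", "PP-A/9"}))]

    for change in unscheduled(diff_snapshots(before, after, t), orders):
        # Only PP-A/8 is reported: db-02 was unplugged without a work order.
        print(f"ALERT {change.port}: {change.before} -> {change.after}")
```

Keeping the diff and the work-order check as separate steps means the full change log is still recorded for auditing, while only the unauthorized changes raise an alert.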

Sounds great, right? Let us know how you’re managing your data center in the comments below.